Introduction

The first intracellular recordings with glass microelectrodes were performed in 1949 by Gilbert N. Ling and Ralph W. Gerard, who developed microelectrodes that allowed them to record the membrane potential of a frog's muscle from inside the cell. In 1960, Alfred Strickholm used liquid-filled micropipettes with tip diameters of a few micrometers for extracellular recordings from muscle cells. Over the following 50 years, the setup for electrophysiological recordings changed little: most work in the field is still done with a small number of electrodes, which record from small subsets of neurons in a single brain region.

Only in 2016 was a new generation of electrophysiology probes developed, through a collaboration between laboratories at the Janelia Research Campus, UCL, the Allen Institute for Brain Science and other institutions. These probes, called Neuropixels, have roughly 1,000 recording sites, 384 of which can be recorded simultaneously as distinct channels across different brain regions. Another probe, NeuroSeeker, was also developed and records from more than 1,300 channels at once. This opens new research avenues, such as studying how microcircuits process stimuli and plan upcoming actions, and how different cortical layers, and even different brain regions, interact with each other. We performed one of our recordings with a Neuropixels probe and another with a NeuroSeeker probe.

Creating an auditory stimulus

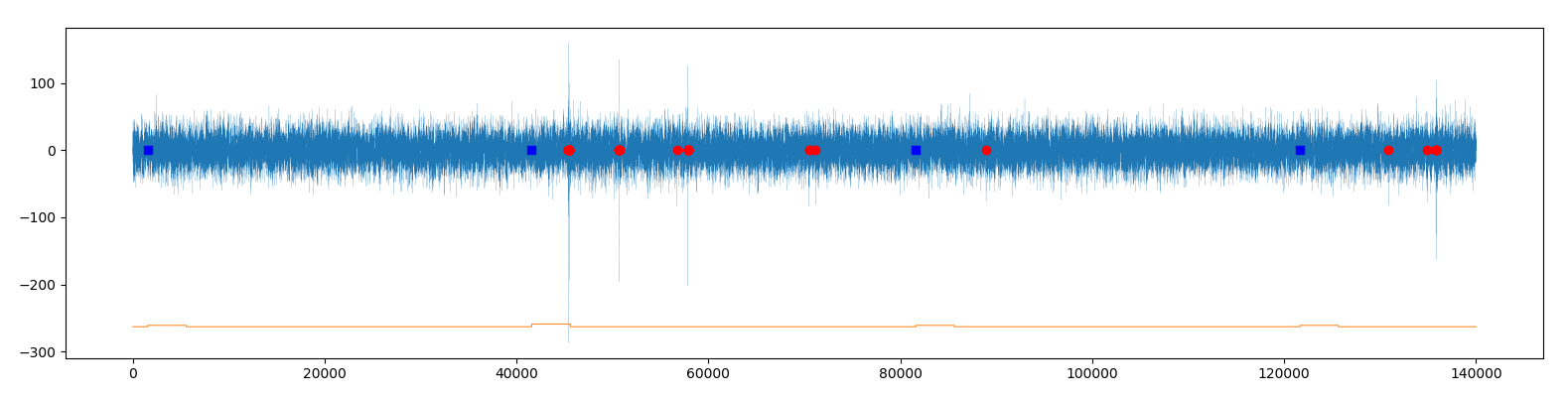

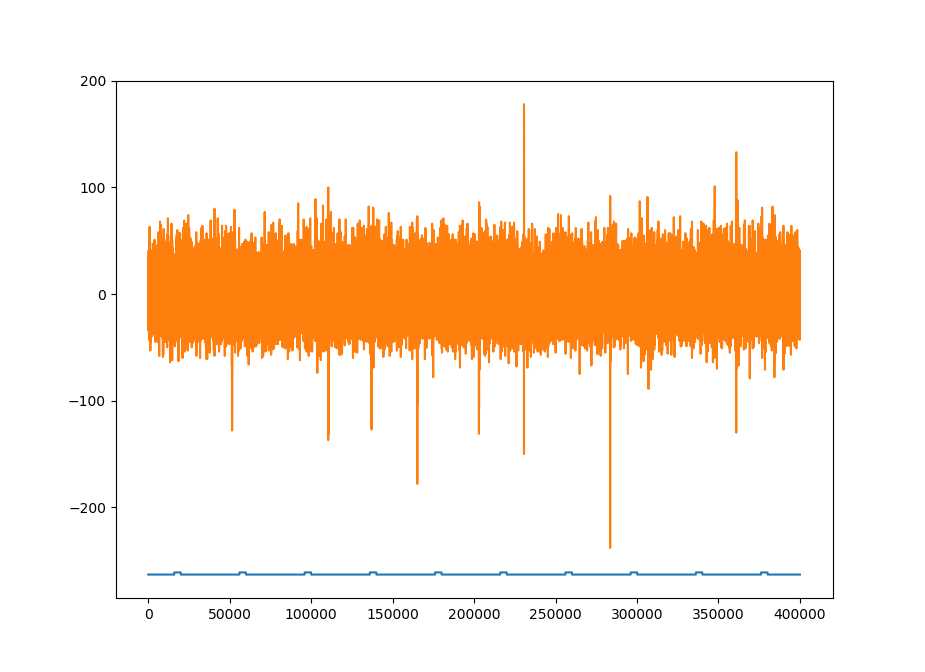

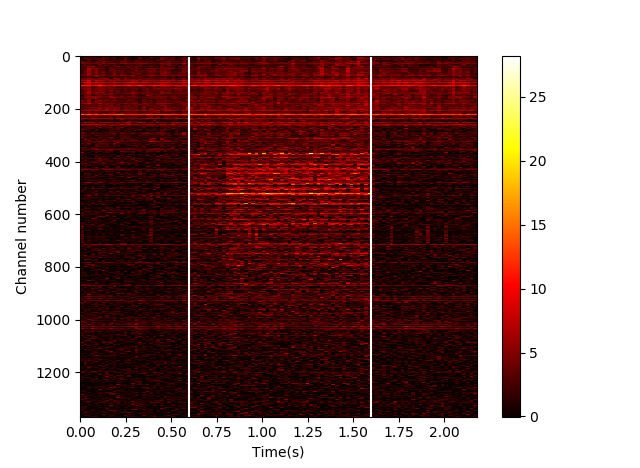

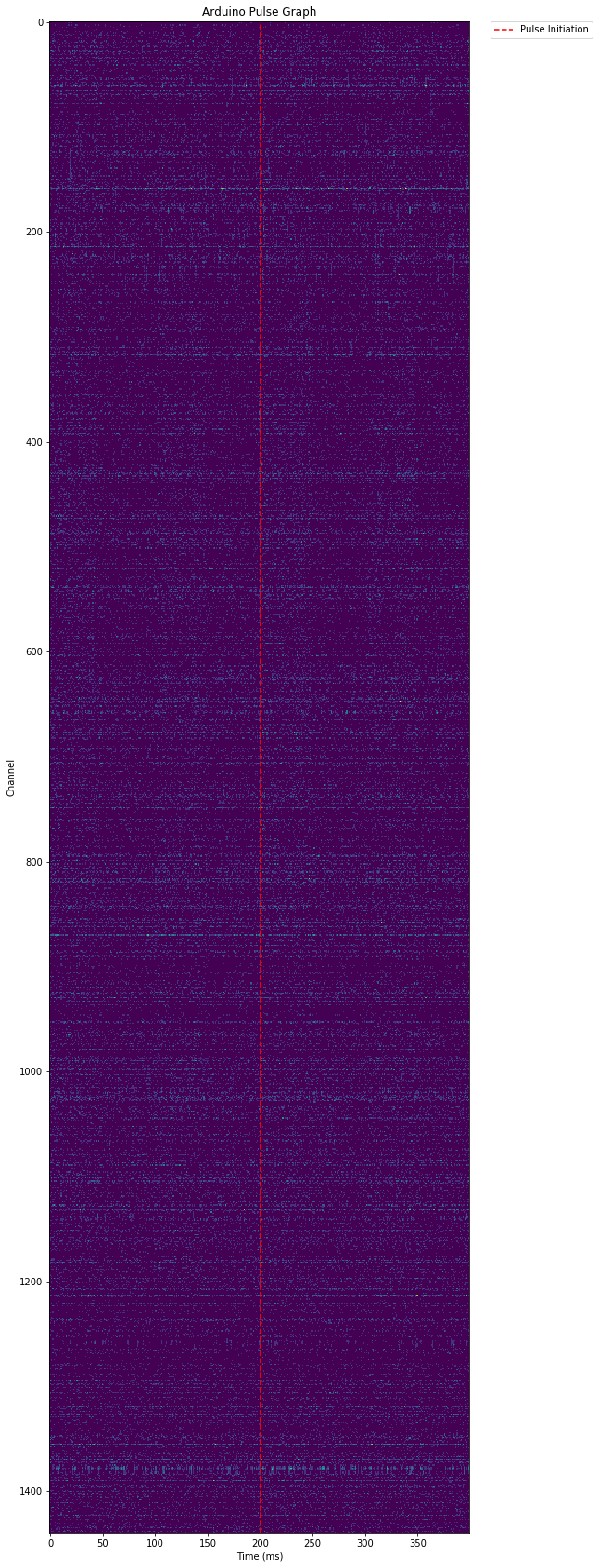

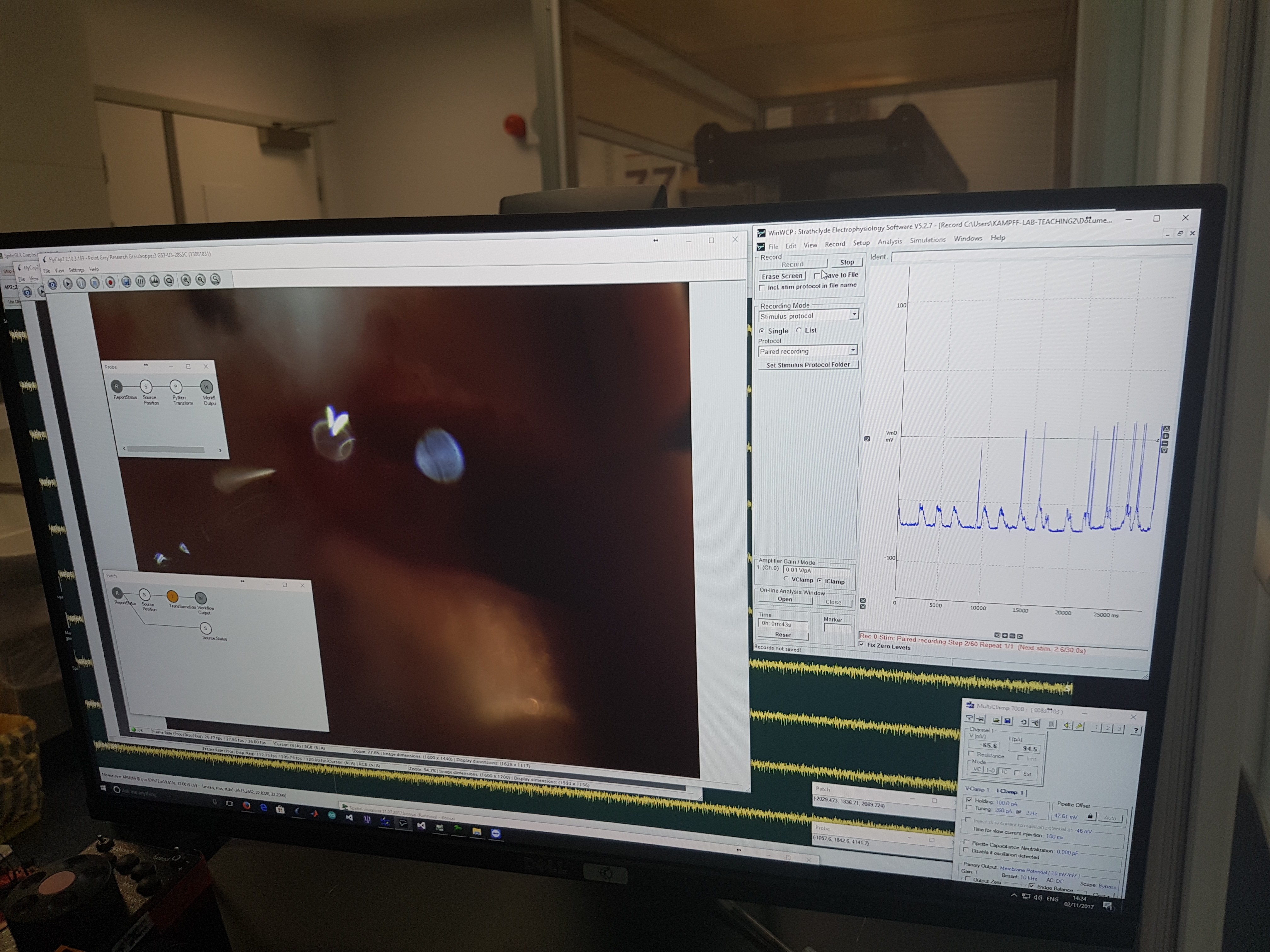

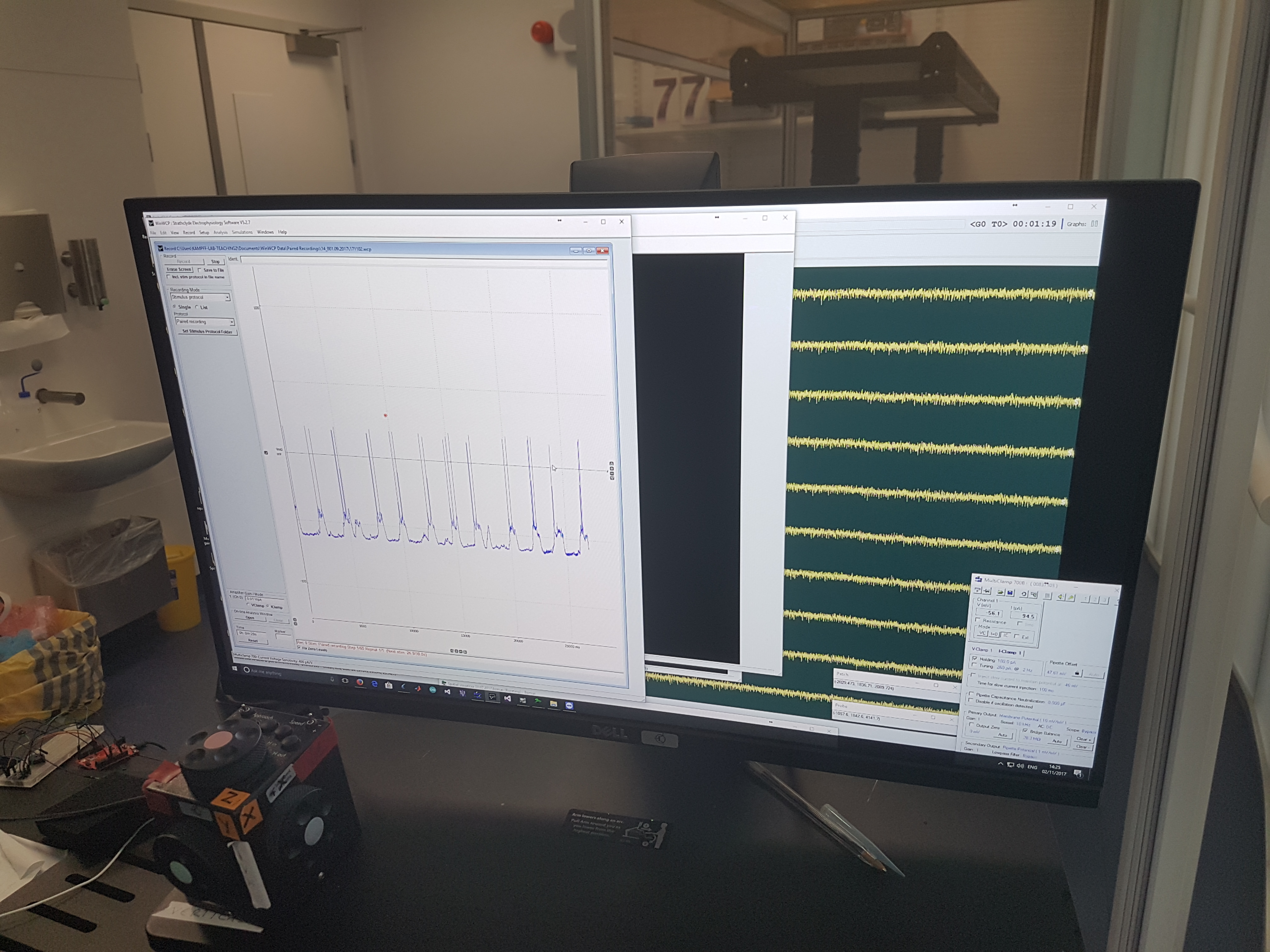

The idea behind our experiments was to look for activity in the motor cortex associated with auditory stimuli. To do so, we developed a paradigm in which a one-second tone at 20 kHz, which is within the rat's hearing range, is played every two seconds. After a random number of trials, around 50, we switch to a 20 Hz tone, which is within the human but not the rat's hearing range. After playing this unexpected tone a couple of times, we switch back to the expected 20 kHz stimulus and repeat the procedure.

We expect to see activity in the rat's motor cortex associated with the 20 kHz sound, so that the number of spikes during the tone differs from the baseline. We also expect that when we play the 20 Hz stimulus, the brain activity will differ significantly from both the baseline and the activity during the 20 kHz tone.

To synchronize the recorded data with the stimulus we were presenting, we sent synchronization pulses, called TTL pulses, while the sound was playing, using a different pulse for each of the two tones. We tested the paradigm during an experiment by Andre Marques-Smith, a postdoctoral researcher in the Kampff lab, who was recording with a Neuropixels probe. After making sure that the stimulus worked, we moved on to the NeuroSeeker experiment performed by George Dimitriadis, a postdoc in the Kampff lab. He recorded brain activity for 20 minutes using our paradigm.
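The trial sequence described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the program we used: the 192 kHz sample rate, the run length of 45 to 55 standard trials, the two deviants per block, the 5 ms onset ramps and the TTL labels `1` and `2` are all hypothetical choices, as are the function names.

```python
import numpy as np

SAMPLE_RATE = 192_000   # assumed sound-card rate; must exceed 2 x 20 kHz
TONE_DURATION = 1.0     # seconds of sound per trial
TRIAL_PERIOD = 2.0      # a new tone starts every two seconds
STANDARD_HZ = 20_000    # expected tone, audible to rats
DEVIANT_HZ = 20         # unexpected tone, audible to humans but not rats
TTL_CODE = {STANDARD_HZ: 1, DEVIANT_HZ: 2}  # hypothetical pulse labels


def make_schedule(n_blocks, rng, run_range=(45, 55), n_deviants=2):
    """Tone frequency for every trial.

    Each block is a random-length run of standard tones (around 50)
    followed by a few deviant tones, after which the next block starts
    again with standard tones.
    """
    trials = []
    for _ in range(n_blocks):
        n_standard = int(rng.integers(run_range[0], run_range[1] + 1))
        trials += [STANDARD_HZ] * n_standard
        trials += [DEVIANT_HZ] * n_deviants
    return np.array(trials)


def make_tone(freq_hz, duration=TONE_DURATION, rate=SAMPLE_RATE, ramp_s=0.005):
    """Pure sine tone with 5 ms raised-cosine ramps to avoid onset clicks."""
    t = np.arange(int(duration * rate)) / rate
    tone = np.sin(2 * np.pi * freq_hz * t)
    n_ramp = int(ramp_s * rate)
    ramp = 0.5 * (1 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))
    envelope = np.ones_like(tone)
    envelope[:n_ramp] = ramp
    envelope[-n_ramp:] = ramp[::-1]
    return tone * envelope


rng = np.random.default_rng(0)
schedule = make_schedule(n_blocks=3, rng=rng)
onsets = np.arange(len(schedule)) * TRIAL_PERIOD        # tone onset times (s)
ttl_codes = np.array([TTL_CODE[int(f)] for f in schedule])
waveforms = {f: make_tone(f) for f in (STANDARD_HZ, DEVIANT_HZ)}
print(len(schedule), "trials,", int((schedule == DEVIANT_HZ).sum()), "deviant tones")
```

Sending the tones to a speaker and emitting the TTL pulses depends on the audio and I/O hardware, which is not described here, so that part is left out.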

Building our own motor

When inserting the probe into the brain, operating the manipulator axes by hand is laborious, and the descent speed of the probe can vary a lot. We therefore decided to modify our manipulator so that it can lower the probe automatically. As a first step, we equipped the manipulator with a motor for the Z-axis. The mount was 3D-printed in plastic and has several components, including a holder that clamps the shaft, a carrier for the motor, and two gears that transmit the motion. Our designer Federico said: “The most difficult part of DIY-ing our manipulator set is having a design that can hold the motor and clamp the shaft nicely and stably. While rotating, the gears wiggle from time to time. What we want to improve from here is making this manipulator set more stable.”
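Because the gears sit between the motor and the Z-axis, the motor has to be commanded in units that account for the gear ratio. A worked sketch of that arithmetic follows, assuming a stepper motor with 200 full steps per revolution, 16x microstepping, a hypothetical 2:1 gear reduction and a Z-axis that advances 0.5 mm per knob revolution. None of these values come from our build; they only show how the conversion works.

```python
# Hypothetical mechanical parameters (not from the original build)
STEPS_PER_REV = 200      # full steps per motor revolution (typical 1.8 degree stepper)
MICROSTEPS = 16          # driver microstepping factor
GEAR_RATIO = 2.0         # motor-gear turns per manipulator-knob turn
MM_PER_KNOB_REV = 0.5    # Z travel per knob revolution


def steps_per_mm():
    """Microsteps the motor must make to move the probe by 1 mm."""
    knob_revs_per_mm = 1.0 / MM_PER_KNOB_REV
    return STEPS_PER_REV * MICROSTEPS * GEAR_RATIO * knob_revs_per_mm


def step_rate_for_speed(speed_um_per_s):
    """Step frequency (Hz) needed for a constant descent speed in um/s."""
    return steps_per_mm() * speed_um_per_s / 1000.0


print(steps_per_mm())            # -> 12800.0
print(step_rate_for_speed(5.0))  # -> 64.0
```

A constant step rate is what gives the steady descent speed that is hard to achieve by hand. Gear wiggle of the kind Federico describes would act as backlash that this open-loop arithmetic cannot see, which is one motivation for measuring the actual position.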

Making a code for the motor

The manipulator is connected to a computer running Bonsai, an event-driven, asynchronous programming environment, which reads how far the stereotax has moved. This lets us quantify and digitize the position of the probe. We used an Arduino to trigger the motor of the manipulator. We planned to program a feedback loop that generates commands for the motor and sends the probe position back to the computer to update it. Bonsai then compares the position reported by the stereotax with the expected position (based on the remaining distance and time). Updating on every cycle corrects the errors the motor makes and keeps our automated stereotax precise. Our engineer Francesca said: “This is not a trivial task. Bonsai is an event-based environment, so we needed to program a loop that responds to each event Bonsai receives and initiates the next Arduino command.”
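The correction idea can be illustrated with a small simulation. This is a minimal sketch in Python rather than Bonsai or Arduino code, and it assumes the goal is a constant-speed descent: on every update the required speed is recomputed from the measured remaining distance and the remaining time. The motor model (it delivers only 90% of each command), the 2 mm target, the 400 s duration and all function names are hypothetical.

```python
def plan_step(measured_um, target_um, time_left_s, dt_s):
    """Distance (um) to command for the next update interval.

    The required speed is recomputed from the measured remaining distance
    and the remaining time, so any error the motor made in the previous
    interval is absorbed into the next command.
    """
    if time_left_s <= dt_s:
        return target_um - measured_um      # last interval: close the whole gap
    speed = (target_um - measured_um) / time_left_s
    return speed * dt_s


def simulate(target_um=2000.0, duration_s=400.0, dt_s=1.0, motor_gain=0.9):
    """Closed-loop descent with a motor that delivers only part of each command."""
    position = 0.0
    t = 0.0
    while t < duration_s:
        command = plan_step(position, target_um, duration_s - t, dt_s)
        position += motor_gain * command    # hypothetical imperfect motor
        t += dt_s
    return position


def simulate_open_loop(target_um=2000.0, duration_s=400.0, dt_s=1.0, motor_gain=0.9):
    """Same motor, but a fixed step planned once at the start (no feedback)."""
    n_steps = int(round(duration_s / dt_s))
    step = target_um / n_steps
    return sum(motor_gain * step for _ in range(n_steps))


print(f"closed loop: {simulate():.1f} um, open loop: {simulate_open_loop():.1f} um")
```

With this under-delivering motor, the closed-loop run finishes within a couple of micrometers of the 2 mm target, whereas the open-loop run stops about 200 µm short because nothing compensates for the accumulated error.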

Data analysis

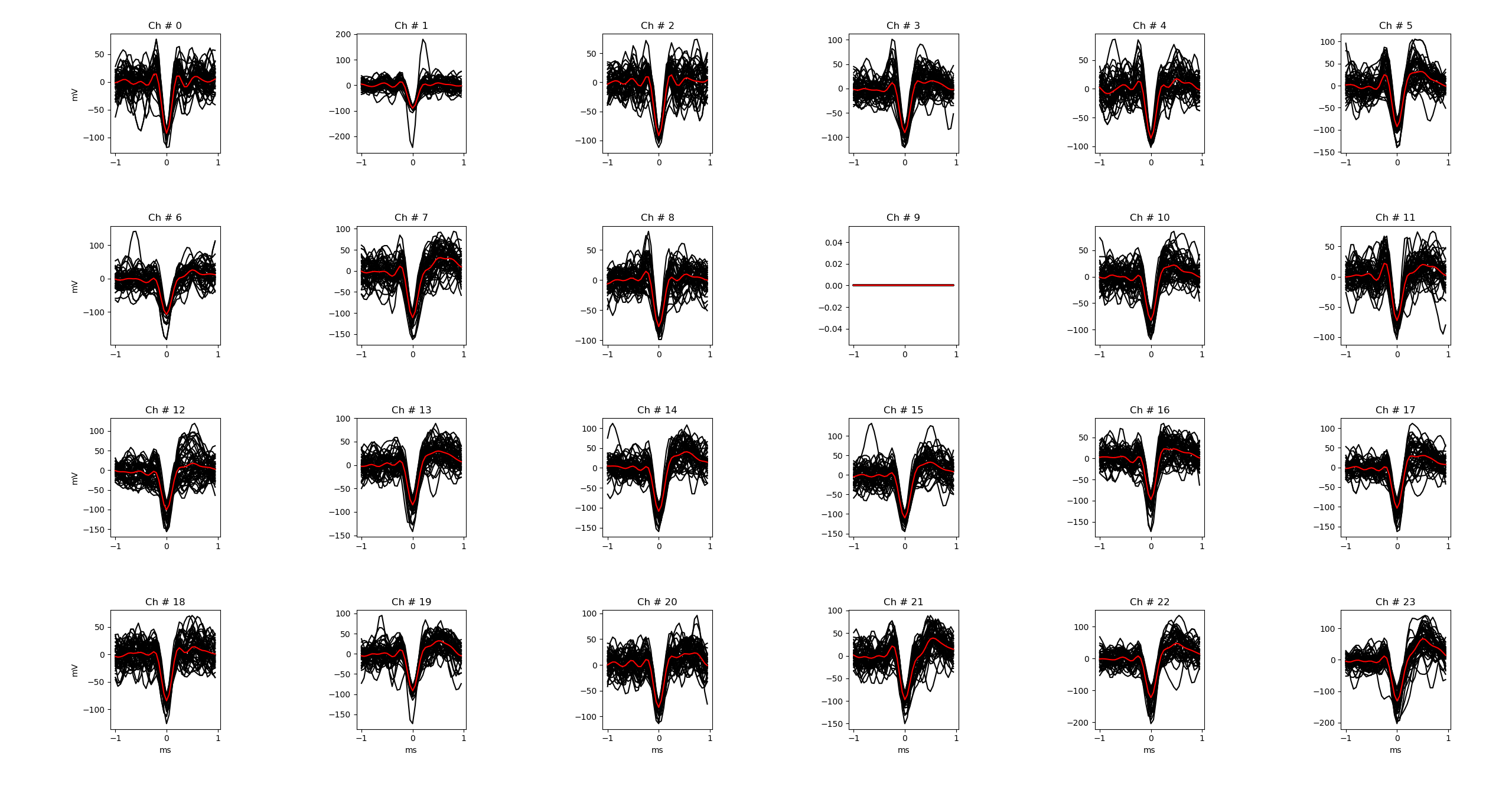

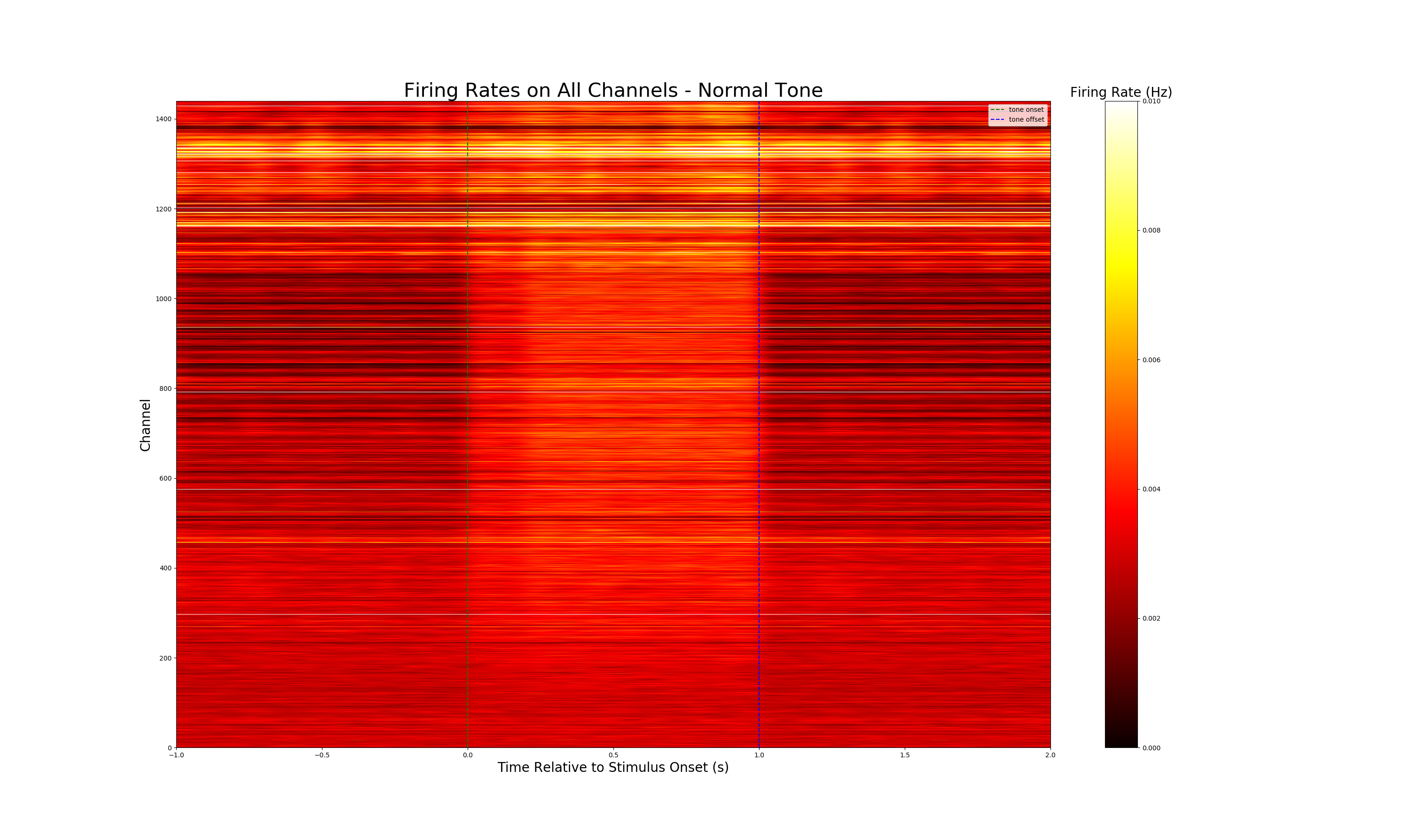

First, we extracted the data using a Bonsai program written by the Kampff lab. Then each of us analyzed the data with a Python analysis script written from scratch. We extracted spikes from all channels and produced a heatmap of activity aligned to stimulus onset.
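One way such an analysis could look is sketched below with NumPy and run on synthetic data. It assumes the recording is a samples × channels array that is already high-pass filtered, that the TTL pulses were saved as a separate trace, and that spikes are detected as negative threshold crossings at a multiple of a robust noise estimate (median absolute value / 0.6745). That thresholding method is a common choice swapped in for illustration, not necessarily what each of us used; the 30 kHz sampling rate, threshold factor, window, bin size and function names are assumptions.

```python
import numpy as np

FS = 30_000  # assumed sampling rate (Hz)


def ttl_onsets(ttl, threshold=0.5):
    """Sample indices of rising edges in a TTL trace."""
    high = ttl > threshold
    return np.flatnonzero(~high[:-1] & high[1:]) + 1


def detect_spikes(signal, k=5.0, refractory=30):
    """Negative threshold crossings with a robust noise estimate.

    Threshold = -k * median(|x|) / 0.6745; crossings closer than
    `refractory` samples to the previously kept one are dropped.
    """
    thresh = -k * np.median(np.abs(signal)) / 0.6745
    below = signal < thresh
    crossings = np.flatnonzero(~below[:-1] & below[1:]) + 1
    kept = []
    for c in crossings:
        if not kept or c - kept[-1] >= refractory:
            kept.append(c)
    return np.array(kept, dtype=int)


def psth_heatmap(data, onsets, window=(-0.5, 1.5), bin_s=0.05, fs=FS):
    """Channels x bins matrix of mean firing rate (Hz) around stimulus onsets."""
    edges = np.arange(window[0], window[1] + bin_s / 2, bin_s)
    heat = np.zeros((data.shape[1], len(edges) - 1))
    for ch in range(data.shape[1]):
        spikes = detect_spikes(data[:, ch])
        for onset in onsets:
            rel = (spikes - onset) / fs            # spike times relative to onset (s)
            heat[ch] += np.histogram(rel, bins=edges)[0]
    return heat / (len(onsets) * bin_s), edges


# Synthetic demo: 20 s of noise on 4 channels, a 1 s tone every 2 s,
# and extra spikes on channel 0 only while the tone is on.
rng = np.random.default_rng(1)
n_samples, n_channels = 20 * FS, 4
data = rng.normal(0, 10, size=(n_samples, n_channels))
ttl = np.zeros(n_samples)
stim_starts = np.arange(1, 19, 2) * FS
for s in stim_starts:
    ttl[s:s + FS] = 1.0                            # TTL high while the tone plays
    for spike in range(s + 300, s + FS, 300):      # one spike every 10 ms
        data[spike, 0] -= 200.0
onsets = ttl_onsets(ttl)
heat, edges = psth_heatmap(data, onsets)
```

Because a different TTL pulse marked each tone type, the onsets can be split by tone and `psth_heatmap` called separately for the 20 kHz and 20 Hz trials. `heat` can then be drawn with matplotlib's `imshow`, with channels on the vertical axis and time from stimulus onset on the horizontal axis.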

Results